The demand for powerful AI capabilities is driving an unprecedented need for GPU compute. As supply chain constraints make GPUs increasingly difficult to obtain, companies are struggling to secure the infrastructure their AI initiatives require. In response, platforms like Inference.ai are emerging to match AI workloads with available cloud GPU compute.

Addressing GPU Supply Chain Constraints

Demand for Nvidia’s high-performance AI cards has outstripped supply, leading to shortages. Chipmakers like TSMC have also signaled that general GPU supply could remain constrained until at least 2025. The scarcity has raised concerns about anti-competitive practices and drawn scrutiny from regulators such as the U.S. Federal Trade Commission. Amid these challenges, major players like Meta, Google, Amazon, and Microsoft are shifting toward developing their own custom chips.

The Role of Inference.ai in GPU Compute Provisioning

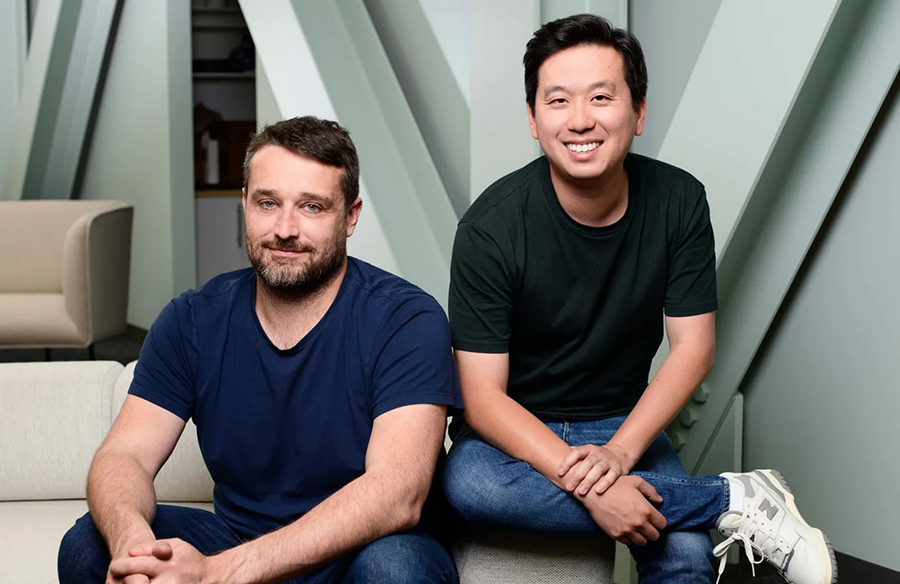

Inference.ai, founded by John Yue and Michael Yu, offers infrastructure-as-a-service cloud GPU compute through partnerships with third-party data centers. The company uses algorithms to match customers’ AI workloads with available GPU resources, simplifying the process of acquiring infrastructure. By bringing clarity to a complex hardware landscape, Inference.ai aims to improve throughput, reduce latency, and lower costs for its clients.

Differentiating Factors and Value Proposition

Inference.ai distinguishes itself by providing customers with cloud GPU instances along with 5TB of object storage. Through algorithmic matching and partnerships with data center operators, the platform claims to offer GPU compute at significantly lower prices than the major public cloud providers. By cutting through the confusion and variability of the hosted GPU market, Inference.ai aims to help decision-makers quickly identify the right infrastructure for their projects.

Competition and Investment Landscape

Inference.ai faces competition from established players like CoreWeave and Lambda Labs, as well as from emerging startups like Together, Run.ai, and Exafunction. Even so, its recent funding round signals investor confidence in its growth potential. Backed by a $4 million investment from Cherubic Ventures, Maple VC, and Fusion Fund, Inference.ai is poised to expand its deployment infrastructure and strengthen its position in the market.

In summary, Inference.ai’s approach to matching AI workloads with cloud GPU compute offers a promising response to the challenges posed by GPU supply constraints. With its focus on efficiency, affordability, and accessibility, Inference.ai is well-positioned to help more companies obtain the compute they need to build and deploy AI.